Problem

Extreme requirements require interesting solutions. Sometimes there is a need to come up with a hybrid solution that doesn’t always look beautiful at first sight. One example is the Message Locker solution.

In a service-oriented architecture, an application consists of many services that interact with each other.

Inevitably, there comes a need to fight high latencies caused by remote calls and the necessary serialization/deserialization. In a long chain of dependent service calls, each call results in a network hop with its required fees, like marshaling data and passing it through the network, which adds at least a few milliseconds per call.

A service that needs to gather output from multiple dependencies to do its job is an aggregating service.

Such a service needs to be smart about how it calls its dependencies. If they are called one by one, their latencies accumulate. The most obvious way to prevent this is to call the dependencies in parallel. Now the service’s own latency is defined mostly by its slowest dependency. In this case, we say that the slowest dependency is on the critical path.
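The difference between the two calling styles can be illustrated with a minimal Python sketch. The dependency names, the latencies and the `call_dependency` helper are assumptions made up for this illustration; the sleep only simulates a remote call.

```python
import asyncio
import time


async def call_dependency(name: str, latency_s: float) -> str:
    """Hypothetical stand-in for a remote call; the sleep simulates its latency."""
    await asyncio.sleep(latency_s)
    return f"{name}-reply"


# Made-up dependencies and their latencies in seconds.
DEPENDENCIES = {"B": 0.05, "C": 0.10, "D": 0.02}


async def call_sequentially() -> list:
    # One by one: the latencies add up (about 0.05 + 0.10 + 0.02 seconds).
    return [await call_dependency(n, t) for n, t in DEPENDENCIES.items()]


async def call_in_parallel() -> list:
    # All calls in flight at once: total time is about the slowest one (0.10 s).
    return await asyncio.gather(
        *(call_dependency(n, t) for n, t in DEPENDENCIES.items())
    )


def timed(coro_fn):
    """Run an async function to completion and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = asyncio.run(coro_fn())
    return result, time.perf_counter() - start


if __name__ == "__main__":
    for fn in (call_sequentially, call_in_parallel):
        replies, elapsed = timed(fn)
        print(fn.__name__, replies, f"{elapsed:.3f}s")
```

In the parallel version, dependency C (the slowest one) is the one on the critical path.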

An aggregating service isn’t complex merely because it calls multiple services in parallel. And usually there is a simple way to avoid creating another service if the only business value it adds is aggregating the output of multiple dependencies.

But an aggregating service becomes complex when:

- It adds complex logic on top of the data returned by dependencies

- It has to be sophisticated at orchestrating calls to many dependencies.

Calling dependencies optimally is often the most important thing to do when fighting high latencies. And thus, eventually, there comes a need for an aggregating service that can call multiple dependencies in a savvy way.

But even when an aggregating service is already in use, there inevitably comes a need to fight high latencies. And there are only so many ways this can be done:

- decrease the latency of each dependency on the critical path (often by pulling up the dependencies of a dependency and calling them first)

- call dependencies in an even smarter way.

This post focuses on the second way. If the aggregating service already parallelizes calls to its dependencies as much as possible and there is no way to make it any better, then, to be honest, not much can be done anymore.

Seriously, when service A needs to call dependency B so that it can call dependency C later, what else can be done to save the extra 10 ms you need so much?

That’s where Message Locker comes in useful. It ventures into somewhat nasty territory to save additional milliseconds in the aggregating service.

Message Locker

"Message Locker" means a locker for a message. The service stores a message in a kind of locker so that only a specific client can grab it. If the message is not received within a certain period, it becomes unavailable.

Message Locker is a distributed service that stores all its data in memory. A client that sends a message into the locker is called a sender. A client that receives a message from the locker is called a receiver.

Each message is stored in the locker under a unique random key. When a sender puts a message into the locker, it also provides additional attributes, like:

- TTL - time to store the message in the locker,

- Reads - number of times the message can be received.

A message is removed from the locker once it has been received the defined number of times or once its TTL has expired. These rules prevent Message Locker from becoming bloated with obsolete messages.

Even after a message has been removed, Message Locker is still aware of its previous presence. Whenever a receiver tries to get an evicted message, it gets an error immediately.

When a receiver tries to get a message that is not evicted yet, the message is returned to the receiver and its read count is increased. This approach doesn’t handle retries properly, though, since a retried request counts as an additional read.

When a receiver tries to get a message that has not been sent yet, Message Locker holds the request until the message becomes available or a timeout occurs.
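The rules above can be condensed into a small single-process sketch. It is only an illustration of the described semantics, not the actual distributed service: the class and method names (`MessageLocker`, `put`, `get`, `MessageEvicted`) are assumptions, reads are counted down rather than up, and the tombstones that remember evicted keys are never cleaned up here.

```python
import threading
import time


class MessageEvicted(Exception):
    """The message existed but was removed (reads used up or TTL expired)."""


class MessageLocker:
    """Hypothetical in-memory sketch of the locker rules described above."""

    def __init__(self):
        self._cond = threading.Condition()
        self._messages = {}    # key -> [payload, expires_at, reads_left]
        self._evicted = set()  # tombstones: keys of messages already removed

    def put(self, key, payload, ttl_s, reads):
        if reads < 1:
            raise ValueError("reads must be at least 1")
        with self._cond:
            self._messages[key] = [payload, time.monotonic() + ttl_s, reads]
            self._cond.notify_all()  # wake up receivers already waiting

    def get(self, key, timeout_s):
        deadline = time.monotonic() + timeout_s
        with self._cond:
            while True:
                self._evict_if_expired(key)
                if key in self._evicted:
                    raise MessageEvicted(key)  # known but gone: fail immediately
                entry = self._messages.get(key)
                if entry is not None:
                    entry[2] -= 1              # one read consumed
                    if entry[2] == 0:
                        self._evict(key)
                    return entry[0]
                # Not sent yet: hold the request until put() or the timeout.
                remaining = deadline - time.monotonic()
                if remaining <= 0:
                    raise TimeoutError(key)
                self._cond.wait(remaining)

    def _evict_if_expired(self, key):
        entry = self._messages.get(key)
        if entry is not None and time.monotonic() >= entry[1]:
            self._evict(key)

    def _evict(self, key):
        del self._messages[key]
        self._evicted.add(key)
```

A sender would call `put(key, payload, ttl_s=..., reads=...)`, and each receiver would call `get(key, timeout_s=...)`. A real implementation would also need to bound the set of tombstones and expire messages that nobody ever asks for.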

How to use Message Locker?

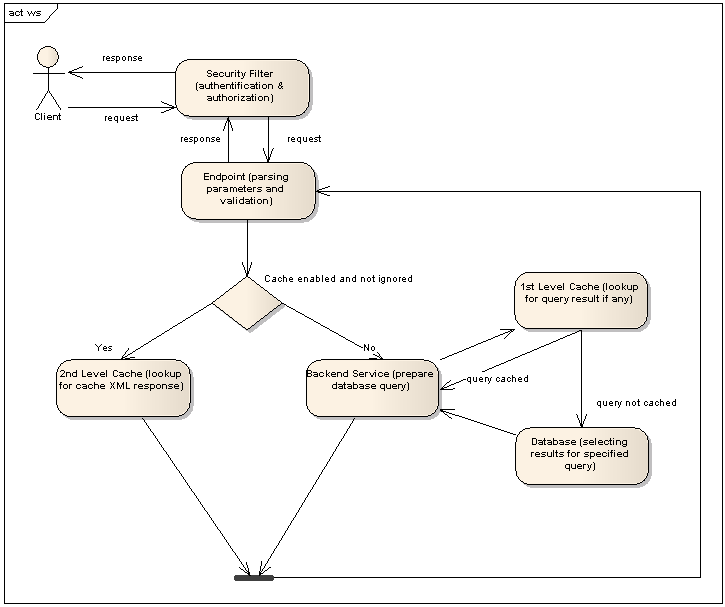

Consider three services: A, B and C. Service A is an aggregator service that calls multiple other services, among them B and C. Service B has to be called before service C, as its output is part of the input for service C. Service A also uses the output of service B for its own needs later.

Normally, service A would call service B, wait for the reply and then call service C. In this workflow, service A needs to do the following work before it can call C, and this extra work becomes part of the critical path:

- wait for reply from service B

- read reply from service B

- construct the request for service C and send it.

This is where Message Locker becomes helpful. The workflow now changes: service A calls service B with key K and, in parallel, calls service C with key K. B puts its reply into Message Locker under key K, and service C receives this reply using key K. Service A also receives service B’s reply from the locker using key K, and does so in parallel with the call to service C.

In this case, there are the following notable changes:

- the time to construct the request and call service C now overlaps with the call to service B, and as such is removed from the critical path

- the time service C takes to deserialize the request and do its initial work also overlaps with the call to service B, and as such is removed from the critical path

- the time service A takes to deserialize the reply from service B overlaps with the call to service C, and as such is removed from the critical path

- the time service C takes to call Message Locker and to receive and deserialize the data is added to the critical path. This could eliminate the savings gained in the second item.
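The changed workflow can be sketched in a few lines of Python. Everything here is a simplified assumption: the services are plain coroutines in one process, `AsyncLocker` is a toy stand-in for Message Locker without TTL or read limits, and the payloads and sleep times are invented.

```python
import asyncio
import uuid


class AsyncLocker:
    """Toy in-process stand-in for Message Locker (no TTL or read limits)."""

    def __init__(self):
        self._slots = {}  # key -> Future that resolves to the message

    def _slot(self, key):
        if key not in self._slots:
            self._slots[key] = asyncio.get_running_loop().create_future()
        return self._slots[key]

    def put(self, key, message):
        self._slot(key).set_result(message)

    async def get(self, key):
        # Waits until the sender has put the message under this key.
        return await self._slot(key)


async def service_b(locker, key):
    await asyncio.sleep(0.05)         # simulated work in B
    locker.put(key, {"b_value": 21})  # B's reply goes into the locker


async def service_c(locker, key):
    await asyncio.sleep(0.02)         # C's request parsing and setup, overlapping with B
    b_reply = await locker.get(key)   # C waits here only if B is not done yet
    return {"c_value": b_reply["b_value"] * 2}


async def service_a(locker):
    key = uuid.uuid4().hex            # unique random key K for this request
    # A calls B and C in parallel and also picks up B's reply for its own use.
    _, c_reply, b_reply = await asyncio.gather(
        service_b(locker, key),
        service_c(locker, key),
        locker.get(key),
    )
    return {**b_reply, **c_reply}


if __name__ == "__main__":
    print(asyncio.run(service_a(AsyncLocker())))
```

Service A never forwards B’s reply to C itself; both A and C read it from the locker under the same key.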

Using Message Locker also adds complexities:

- Services A, B and C need to be integrated with Message Locker

- Service A or B needs to know how many times the message will be received from the locker, or what timeout to use, so as not to overload Message Locker with messages that are no longer needed and not to have messages removed too fast.

Why not use existing solutions like...

Message Locker by itself is very similar to well-known existing solutions: Message Broker and Distributed Cache. Although the similarities are strong, there are a few differences that make Message Locker stand out for its own very specific use case.

Message Broker?

A Message Broker usually has a predefined list of queues. Producers send messages to a queue and consumers consume them. It is possible to create a temporary queue, but that is usually an expensive operation. A Message Broker usually assumes that processing latency is less important than other traits, like persistence, durability or transactionality.

Because of this, a Message Broker can’t be a good replacement for Message Locker.

Distributed Cache?

Message Locker is more like a distributed cache with additional limitations. A message is stored only for one or a few reads, or for a very limited amount of time. A message is removed from the locker as soon as it becomes “received”.

In ordinary caching, content is expected to stay available for a much longer period than in Message Locker.

Summary

Message Locker is a way to decrease latencies in aggregating services by enabling additional parallelization. This is possible because dependencies between services are organized through a proxy, Message Locker. It holds the replies from dependencies and provides them to the receiver as soon as they are available. This makes it possible to further hide expensive operations: network calls and serialization/deserialization.

This comes with additional complexities:

- the right values for the timeout and the number of reads before eviction can be error-prone to define,

- Message Locker development and support can be cumbersome as well,

- services have to be restructured to benefit from Message Locker.

But when there is no other choice and latencies have to be decreased, Message Locker could be a solution.